Watching the Watchers

Passive observers are meant to watch, not slow the service. Trackers promise insight, but can often triple response times and leave players waiting.

Performance of consumer applications is as much about what you engineer inside the app as what you permit around it. Moving workloads to the cloud, improving caching, or tightening algorithms can all make a difference. But the gains can still be undone once a handful of observers crowd the kitchen.

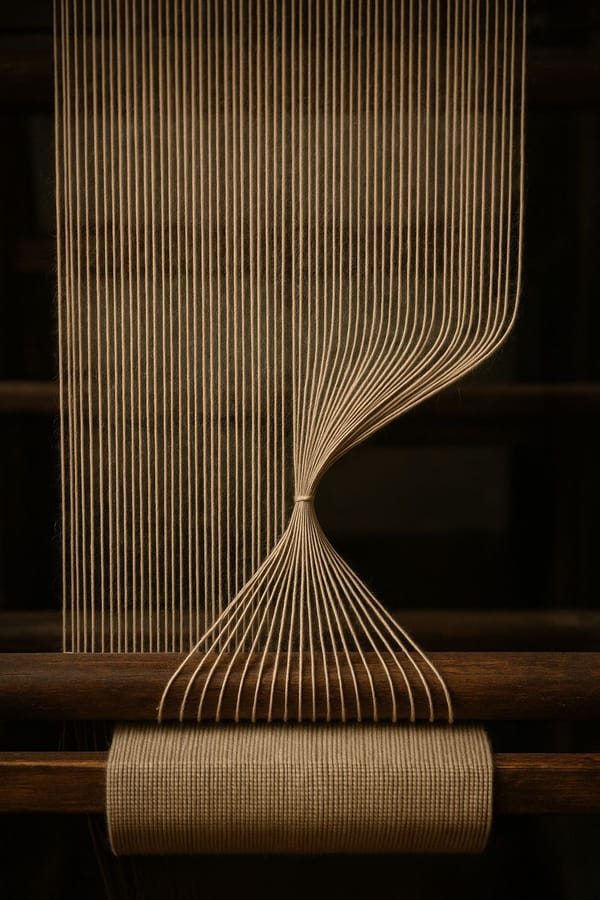

Think of a chef trying to prepare a meal. The dish would be ready quickly enough, but the kitchen is full of people in suits with cameras. They are not cooking. They are watching. They are recording. And in the space they take up, the chef cannot move freely. What should be smooth becomes clumsy.

That is what happens when you pile on code that sits outside the player’s journey. It is not needed to place a bet, deposit some money, or manage an account. Remove it all and the application still works. Yet these observers demand space and attention as if they were essential. Analytics, monitoring, replay, testing, pixels. Each one slows the service. Each one looks harmless in isolation. Together they leave the player waiting longer for the meal.

It isn’t the download that hurts, it’s what runs afterwards

Observers do not just stand quietly in the background. They run. They allocate memory, attach event listeners, intercept DOM changes, capture clicks, serialise state. On constrained devices, where performance matters most, those cycles are precious.

Consumer applications rely on finely tuned event cycles to stay responsive. The rhythm works when the application owns that cycle. Add code that listens, rewrites or interferes and the music falters. Each observer adds to the main thread’s bill. What looks like a simple metric call becomes an ongoing cost to the player experience.

Native apps are not immune. Too much monitoring or replay will slow them down too. The difference is that consumer web apps make it easier, sometimes too easy, to add just one more observer. Drop in a tag, paste a snippet, and the change goes live. Business teams come to depend on that flexibility. A/B testing tools encourage it even further, promising instant experiments without a release cycle. The bill is not paid in infrastructure but in player experience.

Why the real impact is hard to see, and harder to measure

CPU load is slippery. You can see how big a bundle is, but not how hard it makes the device work once it runs. The way a tracker hammers the garbage collector or clogs the event loop rarely shows up in the tools we use to measure performance.

That makes it easy to rationalise. It is only 20 KB. It does not affect Time to First Byte. It loads after the main app. But players do not care about the loading strategy of an observer. They care that scrolling feels laggy, or that depositing money freezes for a second too long.
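One partial way to make that hidden cost visible is the browser's Long Tasks API, which reports main-thread tasks longer than 50 milliseconds. The sketch below is illustrative rather than a description of our tooling: `blockingTime` and `watchLongTasks` are hypothetical helper names, and the wiring only does anything where the browser supports the `longtask` entry type. It is also, of course, one more observer, so the callback is kept deliberately cheap.

```typescript
// Minimal shape of a Long Tasks API entry; real entries carry more fields.
interface LongTaskLike {
  startTime: number; // ms since navigation start
  duration: number;  // ms the main thread was occupied by this task
}

// The Long Tasks API only reports tasks that exceed 50 ms.
const LONG_TASK_THRESHOLD_MS = 50;

// Sum the part of each task that ran past the 50 ms threshold.
// A 20 KB script that causes 300 ms of this is not a "small" script.
function blockingTime(entries: LongTaskLike[]): number {
  return entries.reduce(
    (total, entry) => total + Math.max(0, entry.duration - LONG_TASK_THRESHOLD_MS),
    0,
  );
}

// Hypothetical browser wiring. Returns a function that stops watching.
// Falls back to a no-op where PerformanceObserver or "longtask" is unavailable.
function watchLongTasks(onReport: (blockedMs: number) => void): () => void {
  const PO = (globalThis as any).PerformanceObserver;
  if (typeof PO !== "function") return () => {};
  const supported: string[] = PO.supportedEntryTypes ?? [];
  if (!supported.includes("longtask")) return () => {};

  const observer = new PO((list: { getEntries(): LongTaskLike[] }) => {
    onReport(blockingTime(list.getEntries()));
  });
  observer.observe({ type: "longtask", buffered: true });
  return () => observer.disconnect();
}
```

Comparing the reported figure with a tracker enabled and disabled, on the same device and the same journey, gives a rough sense of what the tracker costs after the download has finished.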

Tools built to watch players can end up reshaping the game itself

The irony is that many of these observers exist to measure player behaviour. Yet by their presence they distort the very thing they claim to observe, and often undermine what they promise to improve. A session can stutter because of the replay recorder that is capturing it.

The burden falls hardest on the margins: players on older Android phones, or in regions with weaker devices and networks. For them, what looks like a minor delay in profiling becomes a visible hitch in reality: a stutter, a pause, a sense that the app is not keeping up.

Built for one world, dropped into another

It is worth saying that many of these tools were designed with a different world in mind. Traditional e-commerce sites are multi-page applications. A tracker records a page view, logs a funnel step, measures conversion, then the page is torn down and a new one takes its place.

We are not running that kind of site. We are running a complex consumer application, a single-page app that stays alive for hours at a time. It maintains state, updates dynamically, and depends on smooth cycles of interaction. Most observers do not make this distinction. They treat a long-lived SPA the same as a short-lived MPA. The result is noise, duplication and overhead in places where the margin for performance is already slim.

The hidden costs of long life

These differences play out in ways that anyone can recognise. A long-lived single-page app, weighed down with observers, often develops problems that a traditional multi-page site does not. Memory leaks build up until the tab becomes unstable. Crashes appear after hours of use. Bringing the app back to the foreground takes seconds instead of being instant.

None of these issues are caused by one observer in isolation. They are the cumulative effect of watchers and trackers that were never designed to run side by side in a stateful, long-lived environment. In an MPA world, a refresh clears the slate. In an SPA, the burden just accumulates.
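To make the accumulation concrete, here is a minimal sketch of the bookkeeping a long-lived app has to do for itself because no page refresh will do it. `ObserverRegistry` is a hypothetical name, not something from our codebase or from any vendor SDK, and it assumes each observer can hand back a teardown function.

```typescript
type Teardown = () => void;

// Tracks one teardown per named observer so nothing outlives its usefulness.
class ObserverRegistry {
  private teardowns = new Map<string, Teardown>();

  // Starting an observer that is already registered first stops the old one,
  // so repeated route changes cannot stack duplicate listeners.
  register(name: string, start: () => Teardown): void {
    const existing = this.teardowns.get(name);
    if (existing) existing();
    this.teardowns.set(name, start());
  }

  // The SPA equivalent of a page refresh: stop everything and forget it.
  disposeAll(): void {
    this.teardowns.forEach((teardown) => teardown());
    this.teardowns.clear();
  }

  get size(): number {
    return this.teardowns.size;
  }
}
```

Calling `disposeAll()` when a player logs out, or when a heavy view is left, gives the app a moment where the slate really is cleared, provided each tool honours its own teardown.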

For technical readers: how observers throw frameworks off rhythm

Observers do not just consume cycles. They interfere with how frameworks detect change.

In Angular, for example, Zone.js patches asynchronous browser APIs such as event listeners and timers so the framework knows when to run change detection. Many monitoring tools, from RUM agents to session replay libraries, hook into the same DOM mutation and event APIs. When they register their callbacks inside Angular’s zone, each of those callbacks looks like application activity. The result is a storm of signals. Angular ends up checking components far more often than is necessary, sometimes on every keystroke or mouse move.

In practice that can double or triple the number of change detection passes during a simple interaction. A tap that should settle in around 250 milliseconds, quick enough to feel responsive, can stretch to 750 milliseconds or more once multiple monitoring tools are running. At that point the delay is obvious to the player.

It gets worse when multiple tools overlap. A replay recorder and a RUM agent both listening to DOM mutations means the same work is duplicated, often two or three times. Each serialises the same event stream, while Angular burns cycles responding to the noise. What begins as background measurement becomes foreground friction.
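The duplication is easier to see in code. The sketch below shows one way it could be avoided in principle: a single capture point that serialises each event once and hands the same payload to every consumer. `SharedCapture` is a hypothetical class, and it assumes the tools involved can accept events pushed to them, which many vendor SDKs do not allow.

```typescript
type Subscriber<S> = (serialised: S) => void;

// One real listener feeds publish(); every tool subscribes to the result.
class SharedCapture<E, S> {
  private subscribers = new Set<Subscriber<S>>();
  private serialise: (event: E) => S;

  constructor(serialise: (event: E) => S) {
    this.serialise = serialise;
  }

  // Returns a function that removes the subscriber again.
  subscribe(fn: Subscriber<S>): () => void {
    this.subscribers.add(fn);
    return () => {
      this.subscribers.delete(fn);
    };
  }

  // Call this from the single real listener, for example one
  // MutationObserver callback shared by all tools.
  publish(event: E): void {
    if (this.subscribers.size === 0) return; // nobody listening: do no work
    const payload = this.serialise(event);   // serialise once, not once per tool
    this.subscribers.forEach((fn) => fn(payload));
  }
}
```

With two tools subscribed, the serialisation cost is paid once per event instead of twice, and with none subscribed it is not paid at all.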

We have tried to mitigate this. One approach was to use a library like Partytown, which moves observers off the main thread and into web workers. The effect was immediate. Responsiveness almost doubled (and in some markets tripled) compared to baseline. That is a good outcome, but also a warning. It shows just how much of the main thread was being consumed by monitoring and other injected code in the first place. By moving it elsewhere we made the player experience smoother, but the data became less reliable, arriving late or incomplete. The result is a stark reminder: these devices do not have infinite resources. If the main experience and monitoring both fight for the same cycles, the player will always feel it.

Other techniques include running observer code outside Angular’s zone with NgZone.runOutsideAngular(), or adopting OnPush change detection so that fewer components are checked on each pass. These help but they are not cures. The deeper issue is structural. Tools that were never written with Angular’s runtime in mind end up clashing with it, and with each other.
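As a rough illustration of the first technique, the sketch below registers a listener from outside Angular's zone so that its callbacks do not trigger change detection. To keep it self-contained it uses two structural stand-ins, `ZoneLike` and `ListenerTarget`, instead of importing Angular or DOM typings. `runOutsideAngular` is the real method name on Angular's `NgZone`; `listenOutsideZone` and the two interfaces are assumptions made for this example.

```typescript
// Stand-in for Angular's NgZone; an injected NgZone instance fits this shape.
interface ZoneLike {
  runOutsideAngular<T>(fn: () => T): T;
}

// Stand-in for a DOM EventTarget such as document or an element.
interface ListenerTarget {
  addEventListener(type: string, listener: (event: unknown) => void): void;
  removeEventListener(type: string, listener: (event: unknown) => void): void;
}

// Registers the handler while outside the zone. Listeners added this way
// run outside the zone too, so a mousemove recorder no longer causes a
// change detection pass on every pixel of movement.
function listenOutsideZone(
  zone: ZoneLike,
  target: ListenerTarget,
  type: string,
  handler: (event: unknown) => void,
): () => void {
  zone.runOutsideAngular(() => target.addEventListener(type, handler));
  // The returned function removes the listener again.
  return () => target.removeEventListener(type, handler);
}
```

Inside a component or service this might be called as `listenOutsideZone(this.ngZone, document, "mousemove", record)`, where `record` is whatever the observer does with the event. Anything in that handler that does need to update the view has to re-enter the zone explicitly, which is part of why this is a mitigation and not a cure.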

The lesson is not “avoid Angular” or “avoid monitoring.” It is to treat observers as part of the runtime contract. If they are not considered at that level they will clash with the framework, with each other, and with the player.

Making the trade-off explicit rather than accidental

The easy story is to say “cut them all.” The harder truth is that observers exist for a reason. They provide evidence that helps products evolve. They give marketing teams the ability to optimise campaigns and drive new players to the product. They allow operations to prove what happened.

The danger is that when they are layered on without restraint, the value they deliver is outweighed by the friction they introduce. Insight that comes at the cost of broken flows is not really insight at all. Optimisation that makes the core journey slower is not really optimisation.

The answer is not elimination but precision. Do we need to run session replay on every player, or could we learn the same lessons from one percent? Do we need overlapping tools capturing the same events, or could we reduce duplication and still get the picture we need? Targeting smaller samples preserves the value while protecting the majority of players from the cost. Product teams still get data, marketing still drives campaigns, operations still get visibility, and players keep the fast, responsive experience they came for.
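Sampling of this kind needs very little code. The sketch below is one possible approach, not a description of what any particular vendor does: it hashes a player identifier into a stable number between 0 and 1 and enables a tool only when that number falls under the chosen rate. The function names, the choice of an FNV-1a style hash, and the idea of salting the hash with the tool name are all assumptions made for illustration.

```typescript
// FNV-1a style 32-bit hash over UTF-16 code units.
// Not cryptographic; it only needs to be stable and reasonably well spread.
function fnv1a(text: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

// True when this player falls inside the sample for this tool.
// rate is a fraction: 0.01 means roughly one percent of players.
function isSampled(playerId: string, tool: string, rate: number): boolean {
  if (rate <= 0) return false;
  if (rate >= 1) return true;
  // Salting with the tool name means different tools pick different players,
  // so no single player is likely to carry every observer at once.
  const bucket = fnv1a(tool + ":" + playerId) / 0x100000000; // in [0, 1)
  return bucket < rate;
}
```

Because the decision depends only on the identifier, the same player gets the same answer on every visit, and raising the rate later only adds players to the sample without removing any.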

Moving what we can to the server, and leaving the rest alone

Not every observer needs to run in the player’s browser. Many of the things we collect, clickstream data, navigation flows, even A/B test assignments, can be handled server-side. That shifts the cost away from the main thread and onto infrastructure that is built to cope with it.

This is not a blanket solution. Some tools, like real-time replay, require client access to the DOM. But wherever the same outcome can be achieved server-side, it should be. Counting page views, logging transactions, deciding which experiment a player belongs in, these are all things the server can manage without slowing down the client.

It comes back to the same trade-off. Every line of code that runs on the player’s device competes with the experience they are trying to have. If the work can be shifted to the server or handled elsewhere, it should be.

Conclusion

Performance of consumer applications is not only about what we build. It is about what we allow in. Observers usually come with good intentions, but each one is still a guest in the player’s journey. And like any guest, it can either be considerate or disruptive.

Our job is to protect that journey. Not by counting bytes, but by recognising impact. Not by saying yes to every insight, but by weighing its cost. In the end, what matters is not how much code there is, but how much weight it puts on the player.

Postscript: This essay showed how every watcher takes something from the player. A sequel explores the mechanics in detail, from frame budgets to long-lived listeners to why claims of “no impact” rarely hold. Read Part 2 here.