The Tide of Review

Why code review must be predictable, humane, and measured.

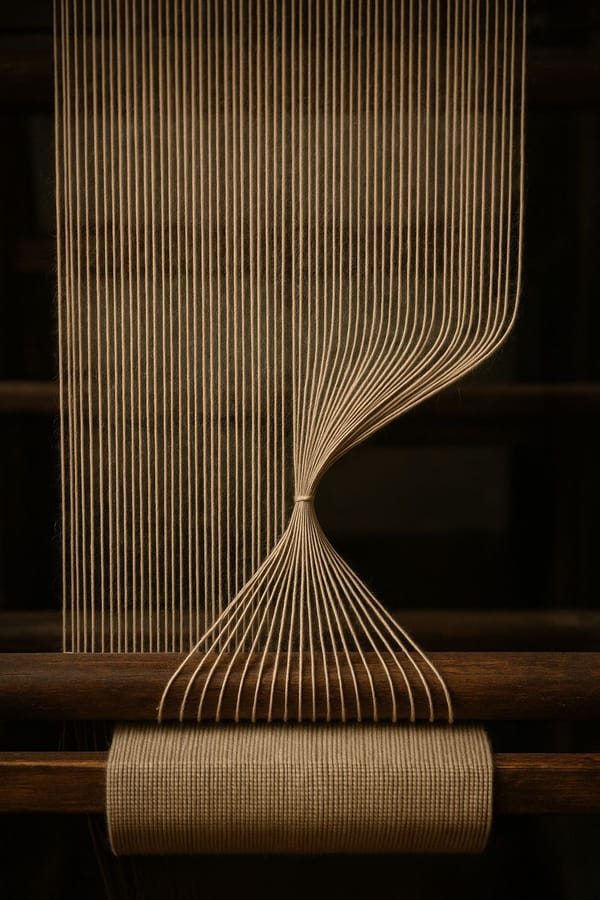

The sea moves whether you are ready or not. Twice a day the tide comes in, and twice a day it goes out. The pattern is steady, the timing reliable, and over time it shapes the coast. Code review should work the same way. Without rhythm it is a scramble; with rhythm it becomes a tide.

Too often review is treated as overhead. Pull requests pile up, reviewers are pinged at random, and feedback arrives days late or not at all. The result is either rubber-stamping to unblock work or sprawling changes that stall until tempers fray. Neither is excellence. Excellence is predictable flow: work that moves at the right cadence, in the right size, with the right attention.

Mechanics: channels and flow

The mechanics of that flow begin with ownership. Google never merges a change without at least one code-owner approval[1]. The rule sounds bureaucratic, but it has the opposite effect. When ownership is explicit, authors know exactly who will review, and reviewers know what they are accountable for. No more guessing, no more desperate pings. Cross-cutting work can be routed with labels like “security” or “migration” that automatically summon the right specialists. Ownership gives the tide a channel.
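To make the ownership idea concrete, here is a minimal sketch of routing a change to its reviewers. The rule table, team handles, and label names are all hypothetical. The "last matching rule wins" behaviour loosely mirrors GitHub's CODEOWNERS, but Python's `fnmatch` is only an approximation of CODEOWNERS pattern syntax (its `*` also matches `/`).

```python
from fnmatch import fnmatch

# Hypothetical ownership rules: (glob pattern, owners).
# Later rules win, loosely mirroring CODEOWNERS precedence.
# Note: fnmatch's "*" also matches "/", unlike CODEOWNERS.
OWNERSHIP_RULES = [
    ("*", ["@platform-team"]),
    ("search/*", ["@search-team"]),
    ("search/ui/*", ["@search-ui"]),
]

# Hypothetical label-to-specialist routing for cross-cutting work.
LABEL_SPECIALISTS = {
    "security": ["@security-reviewers"],
    "migration": ["@db-reviewers"],
}


def owners_for(path):
    """Return the owners of the last rule whose pattern matches the path."""
    matched = []
    for pattern, owners in OWNERSHIP_RULES:
        if fnmatch(path, pattern):
            matched = owners
    return matched


def required_reviewers(changed_paths, labels=()):
    """Collect path owners plus any specialists summoned by labels."""
    reviewers = set()
    for path in changed_paths:
        reviewers.update(owners_for(path))
    for label in labels:
        reviewers.update(LABEL_SPECIALISTS.get(label, []))
    return sorted(reviewers)
```

For example, `required_reviewers(["search/query.py", "search/ui/view.ts"], labels=["security"])` summons both search teams plus the security specialists, with no pings needed.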

Size keeps that channel clear. A 1,200-line pull request is a beached whale. Empirical studies show review latency grows with change size[2]. Google and Meta encourage small, reviewable changes, typically 200 to 400 lines, focused on one intent[1][3]. Larger work is stacked: a refactor, then the feature, then a cleanup. Each part is easy to review and lands quickly. This echoes what I argued in Modern Engineering Practices and Release Trains. Shipping small is not a trick; it is the difference between drift and control.
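A size budget can be enforced mechanically. The sketch below assumes its input is the text printed by `git diff --numstat` (added count, deleted count, and path, tab-separated) for each change in a stack. The 400-line budget and the `check_stack` helper are illustrative assumptions drawn from the range above, not a Google or Meta rule.

```python
# Hypothetical budget, taken from the upper end of the 200-400 line range.
MAX_CHANGED_LINES = 400


def changed_lines(numstat_output):
    """Sum added + deleted lines from `git diff --numstat` text.

    Binary files report "-" for both counts and are skipped.
    """
    total = 0
    for line in numstat_output.splitlines():
        if not line.strip():
            continue
        added, deleted, _path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            continue
        total += int(added) + int(deleted)
    return total


def check_stack(stack):
    """Given (name, numstat_text) pairs, return names over the budget."""
    return [
        name
        for name, numstat in stack
        if changed_lines(numstat) > MAX_CHANGED_LINES
    ]
```

A stack of a 200-line refactor, a 350-line backend change, and a 150-line UI patch passes; a single 1,200-line change is flagged.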

Automation clears the debris. Static analysis has long caught trivial issues[4]. AI can now summarise diffs, suggest test coverage, and flag risky areas[5]. None of this replaces judgement, but it removes noise so reviewers can focus on design, intent, and risk. The tide does not wait for someone to pick litter off the sand. Machines do that so the flow is uninterrupted.
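As a small illustration of machines clearing debris, the sketch below scans only the added lines of a unified diff for trivial issues before a human looks. The three checks are hypothetical stand-ins for a team's real linters and static analysers, which would catch far more.

```python
import re

# Hypothetical trivial-issue checks; stand-ins for a real linter setup.
CHECKS = [
    (re.compile(r"[ \t]+$"), "trailing whitespace"),
    (re.compile(r"\t"), "tab character"),
    (re.compile(r"\bprint\("), "leftover debug print"),
]


def trivial_findings(unified_diff):
    """Report (diff_line_number, message) for issues on added lines.

    Line numbers count lines in the diff text, not in the target file.
    File headers starting with "+++" are skipped.
    """
    findings = []
    for i, line in enumerate(unified_diff.splitlines(), start=1):
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        for pattern, message in CHECKS:
            if pattern.search(added):
                findings.append((i, message))
    return findings
```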

Culture: rhythm and signals

But mechanics alone cannot hold the rhythm. Even a steady tide is dangerous to those who ignore the signals. Culture determines whether review feels like learning or punishment. Conventional Comments give reviewers a shared language: labels such as suggestion:, nitpick:, question:, and praise:, optionally decorated with (blocking) or (non-blocking)[6]. Authors no longer wonder whether a remark is required, and reviewers can highlight what is good as well as what needs fixing[7]. The tide is calmer when everyone reads the signals the same way.
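The Conventional Comments format is `<label> [decorations]: <subject>`, which makes it easy to parse. The sketch below extracts the label, any parenthesised decorations, and the subject. Treating only an explicit `(blocking)` decoration as merge-blocking is an assumed team policy, not part of the specification.

```python
import re

# <label> [(decoration, ...)]: <subject>
COMMENT_RE = re.compile(
    r"^(?P<label>[a-z]+)\s*(?:\((?P<decorations>[^)]*)\))?:\s*(?P<subject>.+)$"
)


def parse_comment(text):
    """Return a dict with label, decorations, subject; None if unlabelled."""
    m = COMMENT_RE.match(text.strip())
    if m is None:
        return None
    raw = m.group("decorations") or ""
    decorations = [d.strip() for d in raw.split(",") if d.strip()]
    return {
        "label": m.group("label"),
        "decorations": decorations,
        "subject": m.group("subject"),
    }


def is_blocking(comment):
    """Assumed policy: only an explicit (blocking) decoration blocks merge."""
    return comment is not None and "blocking" in comment["decorations"]
```

A bot built on this could, for instance, list unresolved `(blocking)` comments at the top of a review so authors know exactly what must change.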

Timing reinforces culture. Reviews should not trickle in as random interruptions. They should arrive like the tide: twice a day, at times everyone knows in advance. In those windows, engineers batch their review work. Outside them, they can focus without constant pings. Some teams add a weekly “review lead” to keep the queue healthy. It is not glamorous, but it stops the drift.
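Tide windows are simple to encode. This sketch assumes two hypothetical 45-minute windows at 10:00 and 15:00 local time and ignores weekends and time zones. A review bot could use `next_window` to tell an author when to expect feedback instead of pinging reviewers.

```python
from datetime import datetime, time, timedelta

# Hypothetical twice-daily review windows (local time) and their length.
WINDOW_STARTS = [time(10, 0), time(15, 0)]
WINDOW_LENGTH = timedelta(minutes=45)


def in_window(now):
    """True if `now` falls inside one of today's review windows."""
    for start in WINDOW_STARTS:
        begin = datetime.combine(now.date(), start)
        if begin <= now < begin + WINDOW_LENGTH:
            return True
    return False


def next_window(now):
    """Return the start of the next review window at or after `now`."""
    if not WINDOW_STARTS:
        raise ValueError("WINDOW_STARTS must not be empty")
    for day_offset in (0, 1):
        day = now.date() + timedelta(days=day_offset)
        for start in sorted(WINDOW_STARTS):
            begin = datetime.combine(day, start)
            if begin >= now:
                return begin
```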

Consider one team building a new search feature. They once opened a single 1,200-line pull request mixing refactor, backend queries, and UI wiring. It sat untouched for two days, then drew dozens of scattered comments. The author rewrote large chunks and merged late with defects. Under a stacked approach, the same work split into three smaller pull requests: a 200-line refactor, a 350-line backend change, and a 150-line UI patch. Each carried a clear test plan. Each was reviewed within hours during scheduled tide windows. All landed within two days, cleaner and safer. The difference was not the code. It was the tide.

Measurement: listening back

Culture sets the rhythm, but you cannot keep time if you never listen back. Measurement makes the tide audible. First-response time should be measured in hours, not days[1]. Time to merge should be tracked too, including the wait between approval and merge, because studies show much of the delay comes after approval, while changes wait for integration[8]. Teams should see their review cycles and pull-request size distributions alongside the four DORA metrics: lead time for changes, deployment frequency, change-failure rate, and time to restore service[9]. If the numbers slip, the rhythm is off. Without measurement, the tide is invisible and the coast erodes unnoticed.
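The review-flow numbers above can be computed from a handful of timestamps per pull request. The `PullRequest` record and its field names are hypothetical; in practice the values would come from your code host's API. Medians are used here because a few stuck changes can dominate an average.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PullRequest:
    # Hypothetical record; field names are illustrative, not a real API.
    opened: datetime
    first_review: datetime
    approved: datetime
    merged: datetime
    changed_lines: int


def hours(start, end):
    """Elapsed time between two datetimes, in hours."""
    return (end - start).total_seconds() / 3600


def review_metrics(prs):
    """Median review-flow metrics in hours, plus median change size."""
    return {
        "first_response_h": median(hours(p.opened, p.first_review) for p in prs),
        "time_to_merge_h": median(hours(p.opened, p.merged) for p in prs),
        "post_approval_wait_h": median(hours(p.approved, p.merged) for p in prs),
        "median_size_lines": median(p.changed_lines for p in prs),
    }
```

Tracking `post_approval_wait_h` separately from `time_to_merge_h` makes it visible when changes are reviewed quickly but then sit waiting for integration.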

Examples show this is practical. Google trains reviewers with “readability” certification: you cannot approve code in a language until you prove you can mentor others in it[1]. Meta requires every engineer to state a test plan in their review submission, forcing thought about risk before anyone else sees the change[10]. Open-source research shows reputation and trust shorten review latency[8]. Different contexts, same lesson: review is not a gate; it is a flow.
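A test-plan requirement like Meta's can be checked before review starts. This sketch assumes a Phabricator-style description with field headers such as `Summary:` and `Test Plan:` at the start of a line. The field list and helper names are assumptions for illustration, not Phabricator's own validation code.

```python
# Assumed field headers in a Phabricator-style description template.
KNOWN_FIELDS = ("Summary:", "Test Plan:", "Reviewers:", "Subscribers:")


def field_body(description, field):
    """Return the text under `field`, up to the next known field header."""
    body, capturing = [], False
    for line in description.splitlines():
        header = next((f for f in KNOWN_FIELDS if line.startswith(f)), None)
        if header is not None:
            if capturing:
                break  # the next field ends the one we are reading
            if header == field:
                capturing = True
                body.append(line[len(field):])  # text on the header line
            continue
        if capturing:
            body.append(line)
    return "\n".join(body).strip()


def has_test_plan(description):
    """True if the description contains a non-empty Test Plan field."""
    return bool(field_body(description, "Test Plan:"))
```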

The honest trade-off

Here is the honest part. None of this is free. Small changes mean authors must split their work with discipline. Scheduled windows mean resisting the urge for instant feedback. Metrics mean confronting uncomfortable truths about bottlenecks. But the alternative is worse: ad hoc reviews, random pings, mega pull requests. Drift instead of rhythm. Jam instead of tide.

If review remains ad hoc, you will always be interrupted, always juggling context, always frustrated. Design it as a predictable system built on clear ownership, small changes, shared language, tide windows, machine guardrails, and honest measurement, and it becomes the opposite of friction. It becomes the tide of modern engineering: twice a day the pull comes, work flows in and out, and over time it shapes the entire coast.

Ship small. Review fast. Be kind. Measure flow. Trust machines to catch lint so humans can catch design.

The tide waits for no one, but it carries everyone.

Footnotes

- [1] Google Eng-Practices: Speed of Code Reviews and How to do a Code Review.

- [2] Yu et al. Predicting Code Review Completion Time in Modern Code Review. arXiv, 2021.

- [3] Graphite Engineering Blog: Stacked Diffs and PR size guidelines.

- [4] Johnson et al. Static Analysis: An Empirical Survey of Industrial Usage. IEEE Software, 2013.

- [5] Barke et al. Grounded Copilot: How Programmers Interact with Code-Generating Models. arXiv, 2023.

- [6] Conventional Comments specification: conventionalcomments.org.

- [7] Atwood, J. Stop Fighting in Code Reviews. Dev.to, 2020.

- [8] Kononenko et al. Mining Code Review Data to Understand Waiting Times Between Acceptance and Merging. arXiv, 2022.

- [9] Forsgren et al. Accelerate: The Science of Lean Software and DevOps (DORA metrics).

- [10] Phabricator/Meta Engineering Guide: mandatory “Test Plan” fields.