Refactor, Rewrite, Repeat?

How AI shifts the balance between improving and starting over.

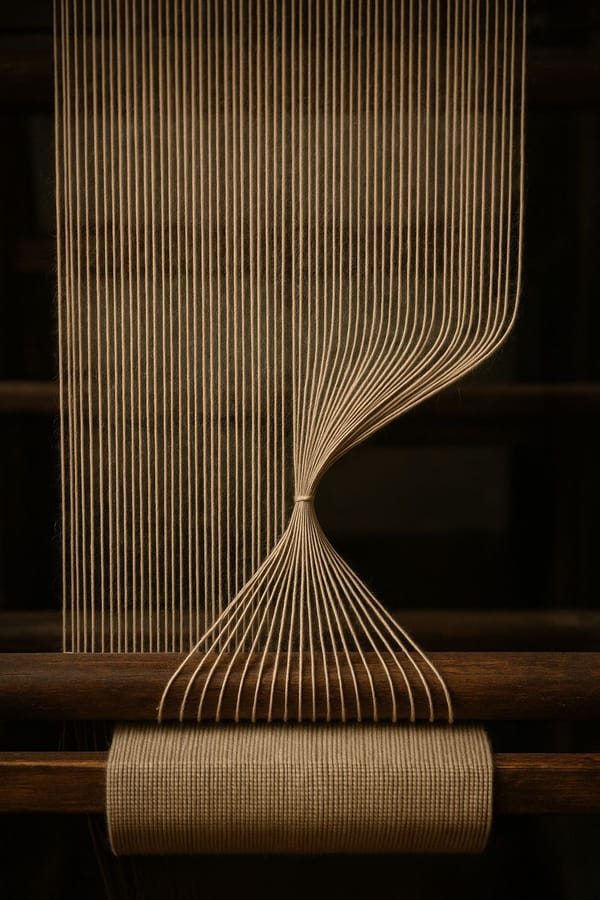

A garden rarely needs razing to the soil. Beds can be reshaped, weeds pulled, and plants lifted and replanted. The temptation to clear everything and start fresh is strong, especially when paths are overgrown and the planting has grown tangled, but razing also strips away the nutrients in the soil that took years to build.

Netscape Navigator is the cautionary tale. Faced with a codebase it considered beyond repair, the company stopped, threw the code away, and began again. By the time the rewrite was ready, the market had shifted. Microsoft had kept iterating on Internet Explorer, which by then dominated the browser market.

The lesson is not as blunt as “never rewrite.” After years of compromises and mounting debt, a fresh start can look reasonable. At times it is. What has shifted is the balance of those choices.

Many of the hardest parts of refactoring are now tractable with AI. Tests can be scaffolded, dead code removed, and migration plans drafted in days rather than months. More than that, the work can become continuous. Code can be scanned, tickets generated, and fixes proposed. Even errors surfaced by real user monitoring can be fed to AI to generate bug fixes. This is already edging into reality; it is not a distant prospect. Refactoring turns from an extraordinary effort into part of the team's regular rhythm.

The same loop can extend beyond hygiene. The principles set out in As Fast As It Feels (continuity, seamless state, polished transitions, perceptual speed) can be applied directly to a codebase. AI agents could scan for violations, raise tickets, and link them to the relevant components. Another agent could pick up those tickets, generate fixes, and run them through CI. Frustrations that once devalued a system can be codified and channelled into improvement. Continuity no longer means tolerating flaws; it means using them to drive progress.
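As a rough illustration of the scanning half of that loop, here is a minimal sketch in Python. Everything in it is an assumption made for the example: the `Rule` and `Ticket` types, the two regex rules, and the file names are hypothetical, and a real agent would rely on far richer analysis than pattern matching. It shows only the shape of the idea: codify a principle as a check, run it across components, and emit tickets that point back at the offending line.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    principle: str  # the principle the rule protects
    pattern: str    # regex that flags a likely violation (hypothetical)
    advice: str     # text to put on the ticket


@dataclass(frozen=True)
class Ticket:
    component: str  # file or component the ticket links to
    line: int       # 1-based line number of the match
    principle: str
    advice: str


# Hypothetical rules, invented for this sketch.
RULES = [
    Rule("perceptual speed", r"\bsleep\(\s*\d",
         "Replace the fixed delay with an event or progress signal."),
    Rule("seamless state", r"location\.reload\(\)",
         "Preserve state instead of forcing a full reload."),
]


def scan(sources: dict[str, str], rules: list[Rule] = RULES) -> list[Ticket]:
    """Return one ticket per rule match, ordered by component then line."""
    tickets = []
    for component, text in sorted(sources.items()):
        for number, line in enumerate(text.splitlines(), start=1):
            for rule in rules:
                if re.search(rule.pattern, line):
                    tickets.append(
                        Ticket(component, number, rule.principle, rule.advice)
                    )
    return tickets
```

In this sketch, `scan` takes a mapping of component names to source text and returns tickets that a second agent, or a person, could pick up. Keeping the rules as data rather than code means a newly observed frustration can be added as one more entry.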

AI also lowers the barrier to rewriting. A new system can be scaffolded quickly, with content migrated and domain rules mined from the old code. The difference lies not in what is possible, but in what follows.

Economically, rewriting almost always means running two tracks at once. The old system still carries players while the new one takes shape, and the overlap usually lasts longer than imagined. Refactoring avoids this split by folding improvements into what is live, keeping focus single-threaded.

From a risk perspective, a rewrite is an all-or-nothing bet. Success may bring a fresh foundation, but failure can set back years of effort. Refactoring moves in smaller steps, each reversible if it proves wrong. The downside is limited; the upside accumulates.

From a human perspective, existing systems carry knowledge that is easily lost. However untidy, they embody years of quirks, regulations, and edge cases. Some can be surfaced by tools, but not all. Refactoring keeps that knowledge close and, by delivering improvements into the live product, gives teams visible wins. Shorter loops encourage engineers to tidy as they go. Visible results sustain both momentum and morale.

The trade-off is not between progress and stasis but between continuity and reset. Refactoring compounds improvements into the running product. Rewriting resets the clock. There are moments when a reset is necessary, especially if the architecture is broken beyond repair or the product’s shape has moved too far from its foundations. Yet those moments are rarer than they used to be, and they deserve more scrutiny than they once did.

AI lowers the barrier for both rewriting and refactoring. Only one of those paths preserves the soil that has been enriched season after season, while continuing to deliver new growth to the players already in the garden. The choice is less about technology than about momentum. Stop, and the market moves on. Keep improving, and the product moves with it.